You know, some days I can really relate to Inspector Harry Callahan. Some days, it feels like being an IDX vendor is a dirty job so unappreciated that only Dirty Harry could fully appreciate it.

Recently, I’ve heard that the NWMLS decided to enact a few more rule changes. Needless to say, I’m all broken up about the new NWMLS rules. The good news is that there will no longer be a 3 download agreement limit. This should allow members to more easily work with multiple vendors, and perhaps better allow members to easily find cost-effective solutions to their IT problems. I think it’s a good idea because it could create more demand for the services I can provide.

The bad news is that starting in October, the NWMLS will charge each entity downloading the IDX data (i.e., the consultant, or the broker for an in-house data feed) $30 per month, per agreement. For example, if vendor A has download agreements with offices A, B, and C, then the vendor will be charged $90 per month. Needless to say, this new rule will seriously hinder your vendor’s ability to inexpensively host web sites or otherwise develop applications with NWMLS listings on them.

Sometimes, I’ve got to wonder what the jive turkeys at the NWMLS are thinking. So now I either have to eat an unwanted (and probably unnecessary) cost or pass the increase on to my customers? Neither scenario really appeals to me (and probably won’t appeal to my customers either). I’d rather increase my costs by buying more servers, going to Inman SF Connect, buying iPhone app development tools & books, or anything else that would ultimately improve end-user satisfaction with the applications I build. But now I have to pay a tax for merely trying to serve my clients? Gee, it isn’t like developing an Evernet XML download is already as much fun as doing my taxes.

My clients are hard-working real estate professionals; they are not professional software engineers. They know about as much about creating Zillow XML feeds or developing real estate based Google Maps mash-ups as I know about selling a home with a troublesome neighbor, or whether a property next to a graveyard loses value because it’s creepy or gains value ’cause it’s quiet. Unfortunately, the nature of the world today requires real estate professionals to partner with vendors and/or consultants, because real estate consumers increasingly demand high-tech services from their agents & brokers, and you can’t provide that service without high-tech experts working on your behalf.

I’m not opposed to higher taxes if I know the money is going to a good cause. But what is this extra $30/month per agreement going to buy me or my clients? Is the NWMLS going to buy faster servers? Hire more IDX support staff? Throw a big party and spend the money on booze and strippers? Is the NWMLS running in the red and in need of a bailout? Seriously, I’d like to know what I’m about to pay for.

Also, wouldn’t it make more sense to charge per vendor instead of per agreement? A vendor needs the same amount of NWMLS IT resources regardless of whether it serves only one member or ten members. Typically, a vendor only downloads the NWMLS data once, and uses the same copy of the NWMLS database for all of its clients. It’s not like a vendor who has 10 clients incurs 10 times the CPU & bandwidth costs that a smaller vendor does.
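
To put rough numbers on that, here is a quick Python sketch comparing the two billing schemes. The $30 per agreement figure is the one from the new rule; the flat per-vendor fee is purely my own hypothetical for comparison (the NWMLS has announced no such thing), and the function names are made up for illustration.

```python
# Fee from the new rule: $30 per month, per download agreement.
FEE_PER_AGREEMENT = 30

# Hypothetical flat per-vendor fee, for comparison only (not an NWMLS figure).
HYPOTHETICAL_FEE_PER_VENDOR = 30


def monthly_cost_per_agreement(num_agreements: int) -> int:
    """Monthly cost when the vendor is billed once for each agreement."""
    return FEE_PER_AGREEMENT * num_agreements


def monthly_cost_per_vendor(num_agreements: int) -> int:
    """Monthly cost when the vendor is billed one flat fee, however many agreements."""
    return HYPOTHETICAL_FEE_PER_VENDOR if num_agreements > 0 else 0


if __name__ == "__main__":
    # Compare both schemes for a vendor with 1, 3, and 10 member agreements.
    for n in (1, 3, 10):
        print(n, monthly_cost_per_agreement(n), monthly_cost_per_vendor(n))
```

Under these assumptions, the per-agreement bill grows linearly with the number of clients while the vendor still downloads the data only once.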

Allowing multiple feeds per broker could encourage more competition between vendors, but increasing vendor costs certainly won’t make things cheaper for members in the long run.

I can see a future phone call from the NWMLS enforcement division going something like this…

I know what you’re thinking, punk. You’re thinking “Did I sign six download agreements or only five?” Well to tell you the truth, in all this excitement I kind of lost track myself. But being as this is the NWMLS, the most powerful MLS in the greater Puget Sound area, and could blow your web site clean off, you’ve got to ask yourself one question: Do I feel lucky? Well, do ya, punk?

Has anybody else heard any details on these new policy changes? Do you know what the new IDX feed fees are for? Do you think the repeal of the download agreement limit will help you? Do other MLSs do this kind of thing? Why do I feel like I forgot my fortune cookie and it says I’m {bleep} out of luck?

A week ago, I decided to finally sign up for a

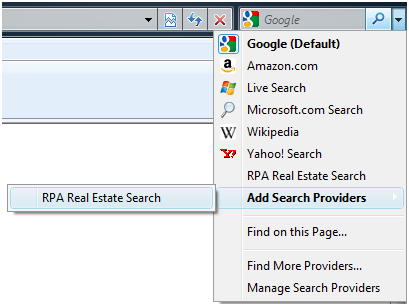

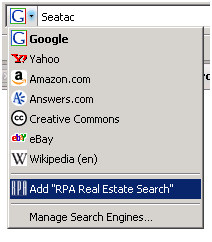

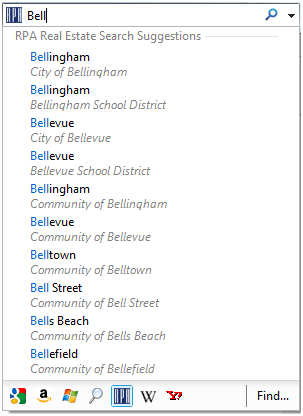

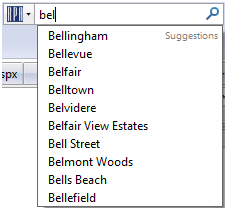

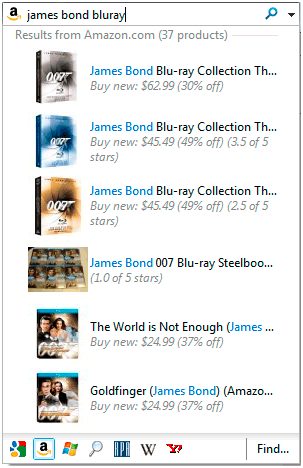

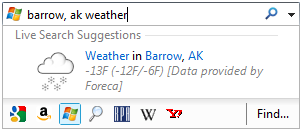

Call me crazy, but I think OpenSearch providers are going to become bigger than RSS feeds over the next year. If IE 8’s forthcoming release doesn’t launch them into the mainstream, I think future releases of Firefox & Chrome will improve upon IE 8’s good ideas. Maybe you should think of it as browser favorites on steroids? If search is sticky, then OpenSearch is superglue and duct tape. If Firefox’s suggestions support was the tip of the iceberg, then IE 8’s implementation is cooler than Barrow, Alaska. The future of OpenSearch looks bright, even if it’s cold outside.

I’ve been way too busy at

The yellow brick road heading into the

It was a warm & lovely summer evening… Our hapless hero goes through his nightly ritual of sorting the junk mail from the bills when he stumbles upon his annual “Official property value notice” post card from the

Sometimes, you find something in your own back yard that’s an unexpected & pleasant surprise. Like that hole in the wall

In Seattle, the real estate technology scene is pretty crowded. There’s the big 3:

Technologically speaking, they have some very compelling technology under development and a